Your Next Stop: The Twilight Zone

Picture, if you will, an organization that operates a legacy software system. A codebase of interconnected dependencies and functions that in its grasp holds the digital heartbeat of their business.

Management, pressured by deadlines and cost savings, and awed by the latest technological promises of ultimate efficiency through artificial intelligence, decides to augment their development teams not with skillful engineers, but with AI prompt engineers. A decision that seems, on paper at least, to be technologically sound and fiscally responsible.

They’re about to learn that the true cost of substituting experience with algorithms is not measured in mere dollars and cents. For in this case, the price to be paid will be in a far more precious currency: the accumulated wisdom of human experience itself.

The Simulations

AI as the Solution Provider

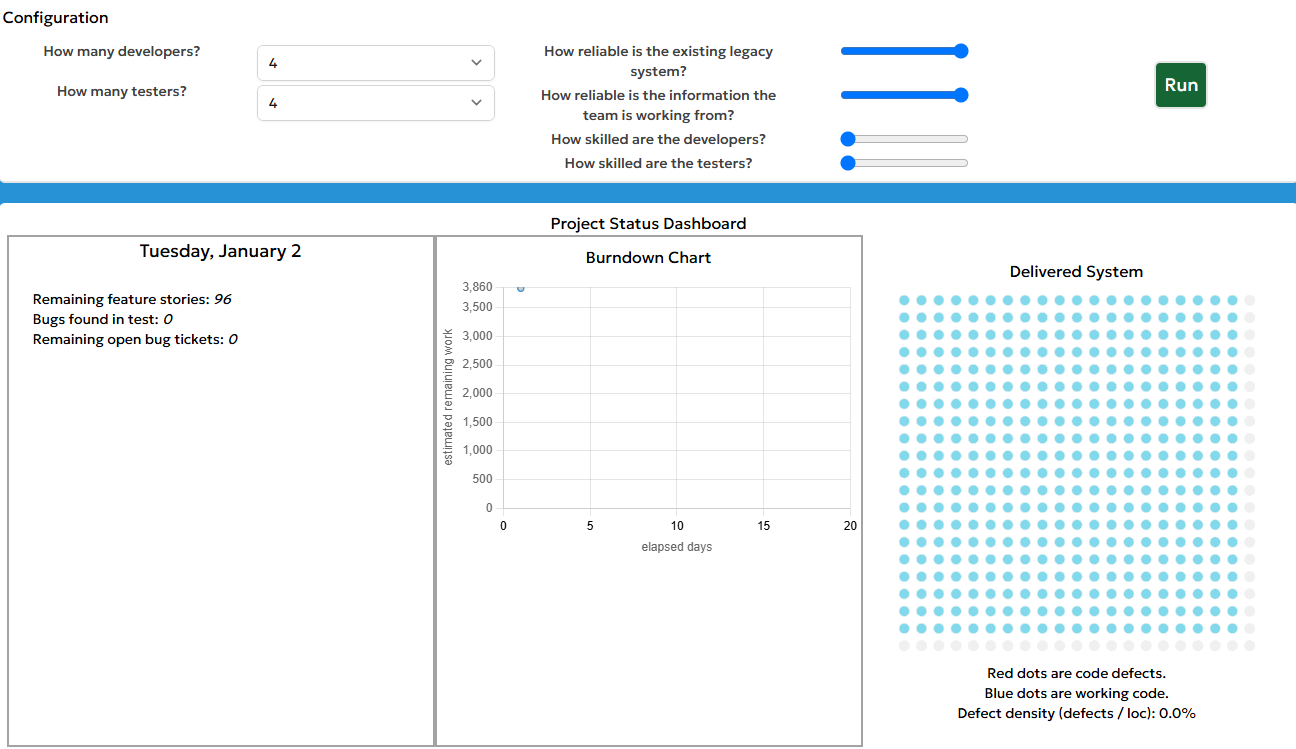

Thanks to Elisabeth Hendrickson [1] and her creation of the DuckSim Sandbox [2], we are able to adjust the dials of a given system of work and run simulations. We begin with setting the conditions and making some decisions.

Conditions:

A highly reliable legacy software system

Information that is of the highest reliability and easy to access

Goal: Add 96 “Feature Stories”

Simulation time-frame is 1 year

Initial bug count, 0

Initial defect density, 0.0%

Decisions:

Augment 4 developers with 4 AI prompt engineers

Augment 4 testers with 4 AI prompt engineers

As we move forward, it is important to define what is meant by “AI prompt engineer” for the context of this article.

Here, an AI prompt engineer is someone with no previous knowledge or understanding of software product development or quality testing, who works solely through AI prompting.

As the simulation runs, think about “What observations can be made from this?”

We begin the simulation.

One month:

20 “Feature Stories” completed out of 96

7 bugs found

7 open bug tickets remain

Defect density: 6.25%

3 months:

52 “Feature Stories” completed out of 96

45 bugs found

45 open bug tickets remain

Defect density: 15.0%

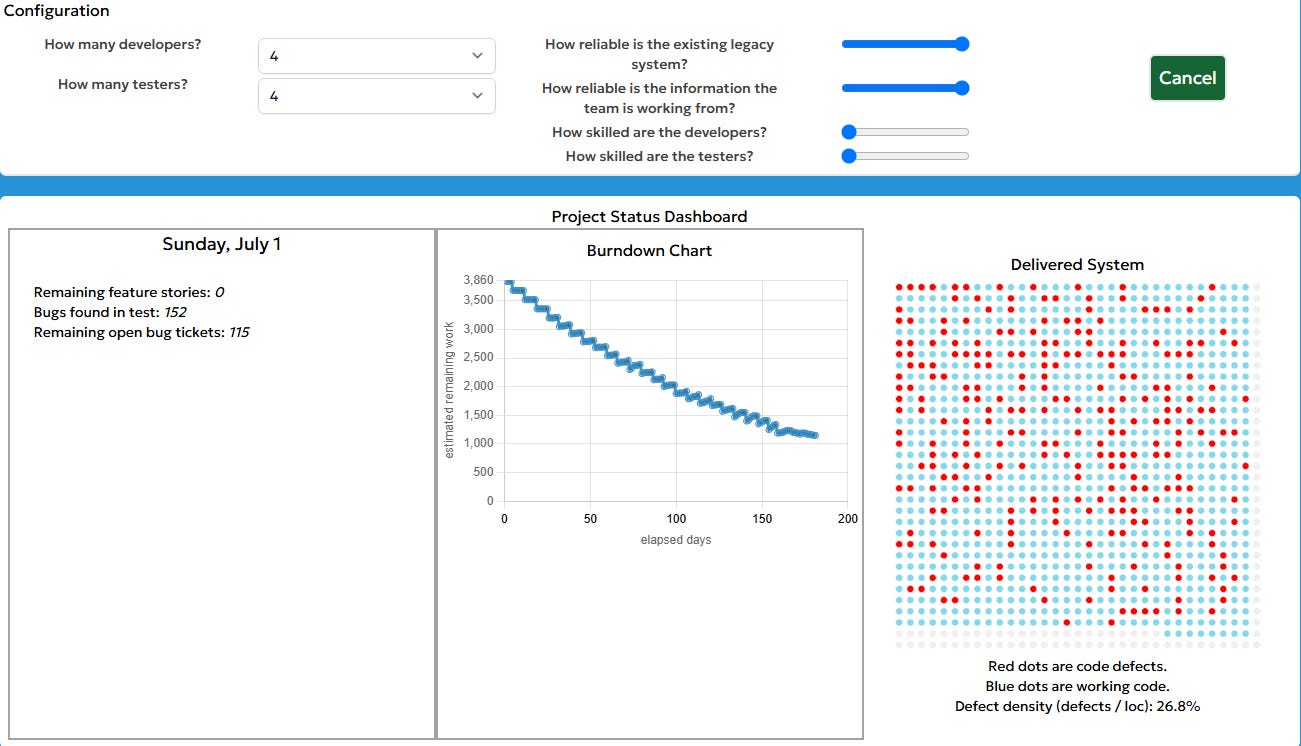

6 months:

96 “Feature Stories” completed out of 96

152 bugs found

115 open bug tickets remain

Defect density: 26.8%

1 year:

96 “Feature Stories” completed out of 96

616 bugs found

208 open bug tickets remain

Defect density: 48.2%
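The checkpoint figures above can be turned into two derived numbers: how many bugs were resolved, and what share of found bugs was still open. The sketch below is only arithmetic on the reported figures. It assumes that every found bug is either open or resolved (resolved = found - open), which the simulation output does not state explicitly. The names `checkpoints` and `summarize` are illustrative.

```python
# Checkpoint figures copied from the first simulation run above.
# Tuple layout: (label, stories_done, bugs_found, open_bugs)
checkpoints = [
    ("1 month", 20, 7, 7),
    ("3 months", 52, 45, 45),
    ("6 months", 96, 152, 115),
    ("1 year", 96, 616, 208),
]


def summarize(rows):
    """Return (label, resolved, open_share) for each checkpoint.

    Assumption: resolved = found - open, i.e. no ticket is closed unfixed.
    """
    summary = []
    for label, _stories, found, open_bugs in rows:
        resolved = found - open_bugs
        open_share = open_bugs / found
        summary.append((label, resolved, round(open_share, 3)))
    return summary


for label, resolved, open_share in summarize(checkpoints):
    print(f"{label:>8}: {resolved:3d} resolved, {open_share:.1%} of found bugs still open")
```

Under that assumption, no bugs were resolved in the first three months. In the second half of the year, with every feature already delivered, 464 more bugs were found (616 - 152) while only 371 were resolved (408 - 37), so the backlog kept growing from 115 to 208.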

Failure Demand

With each new “Feature Story” completed or “Bug” fixed, new problems are generated. The lack of deep system understanding and the reliance on AI suggestions result in surface-level solutions that compound complexity, creating a spiral where maintenance generates more maintenance.

At year-end, the burndown curve has reversed, a signal that the system has become erratic and unmaintainable. The promised efficiency gains of AI-driven development mask the critical loss of system comprehension.

Causal Modeling

Since we will be looking at causal models, we should first have some basic understanding of the bits that make up causal loops. All of these ideas with causal models are centered around helping us see the same reality and tell the story of our systems. Once we have this, we are better able to make decisions and begin to steer our systems.

The ovals are the nouns / variables of the system. These can and do change over time. Either increasing or decreasing depending on the relationship.

The arrows are the links showing causal relationships. Meaning a change in the first variable results in a change in the second variable.

We will use a + to show amplifying relationships that go in the same direction.

We will use a - to show dampening relationships that go in opposite directions.

We will use a half-white / half-gray box to indicate a decision point where the receiving noun / variable may move in the same or opposite direction from the originating noun / variable, depending on the type of intervention.

We will use | | to show a time delay. When you see this, it just means there is not an immediately visible change in the system.

Here are a couple of example causal loops to think through that share the basic idea.

Walking through the first loop, the story goes:

As the Mice Population goes up, the Coyote Population goes up. Notice the + amplifier indicating these two variables are traveling in the same direction.

As the Coyote Population goes up, the Mice Population goes down. Notice the - dampener showing the inverse relationship.

If we continue around the loop, since now the Mice Population is going down, the Coyote Population is going down. And finally as the Coyote Population goes down, the Mice Population goes up.

This loop is balancing, self-correcting, and producing a stable system.

Walking through the second loop tells a different story:

As the Savings Account goes up, the Interest Accrued goes up. As the Interest Accrued goes up, the Savings Account goes up. Nothing balances out this cycle, which makes it a reinforcing loop.

There is far more to causal modeling than what we are using for these examples, but the idea here is to understand the basics well enough to follow the causal models in the rest of this article.
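The two walkthroughs can also be checked mechanically. A common rule of thumb in causal loop diagrams is that a loop is reinforcing when the product of its link polarities is positive (an even number of dampening links) and balancing when it is negative. The sketch below applies that rule to the two example loops. The tuple layout and the name `classify_loop` are illustrative choices, not part of any modeling tool.

```python
from math import prod

# Each link is (source, target, polarity): +1 amplifying, -1 dampening.
mice_coyotes = [
    ("Mice Population", "Coyote Population", +1),
    ("Coyote Population", "Mice Population", -1),
]
savings = [
    ("Savings Account", "Interest Accrued", +1),
    ("Interest Accrued", "Savings Account", +1),
]


def classify_loop(links):
    """Classify a closed loop by the product of its link polarities."""
    # Sanity check: each link must end where the next one starts,
    # and the last link must return to the first variable.
    for (_, target, _), (source, _, _) in zip(links, links[1:] + links[:1]):
        if target != source:
            raise ValueError(f"loop is not closed at {target!r} -> {source!r}")
    sign = prod(polarity for _, _, polarity in links)
    return "reinforcing" if sign > 0 else "balancing"


print(classify_loop(mice_coyotes))  # balancing
print(classify_loop(savings))       # reinforcing
```

Time delays and decision points are left out of this sketch; it covers only the + and - links.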

A future article will explore causal modeling more in depth.

Modeling with Causal Loops (AI as the Solution Provider)

Let’s begin by modeling the conditions and decisions of the legacy software system of work.

The initial state has high-quality information, meaning a deep understanding of system behavior is at least available to be learned. The system is reliable, meaning it behaves predictably and is stable.

When low-skill teams make changes solely relying on AI suggestions, they don’t fully grasp the behavior implications. These changes disrupt existing system patterns while new behaviors emerge that weren’t intended.

Bugs are identified by immediate behavior changes, while reliability issues are the result of more subtle behavior changes. Both bugs and reliability issues make the system behavior less predictable. The understanding of the system behavior becomes less accurate over time, making future changes even riskier.

Modeling this system of work exposes the reinforcing loop revealed by the simulation.

This model shows that each cycle through amplifies the next, which explains the accelerating defect density in the simulation.

As AI-suggested development changes for features and bugs are introduced without contextual understanding, symptoms are being resolved rather than problems. These surface-level solutions add layers of workarounds and increase the amount of convoluted code.

The system complexity grows as system behavior becomes more difficult to understand. The initial highly reliable and accessible information becomes less useful and effective testing decreases.

The knowledge gap of the system expands due to unexpected consequences from the changes. And this incomplete understanding multiplies bugs.

The defect rate increases leading to more bugs needing to be fixed and more time spent on maintenance instead of value adding work.

Maintenance work becomes the primary focus. Increased pressure to fix bugs quickly leads to an increased reliance on AI to solve the problems, completing the reinforcing loop.
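One way to test this story is to push a single change around the loop and see whether it comes back in the same direction. The sketch below does that for a simplified version of the loop. The variable names are paraphrased from the prose above and are an assumption; the original diagram may use different variables or more links. `walk_loop` is a hypothetical helper.

```python
# Links paraphrased from the prose walkthrough above (an assumption; the
# original diagram may differ). Polarity: +1 amplifying, -1 dampening.
ai_loop = [
    ("Reliance on AI suggestions", "Surface-level solutions", +1),
    ("Surface-level solutions", "System complexity", +1),
    ("System complexity", "Knowledge gap", +1),
    ("Knowledge gap", "Defect rate", +1),
    ("Defect rate", "Maintenance work", +1),
    ("Maintenance work", "Reliance on AI suggestions", +1),
]


def walk_loop(links, start_direction=+1):
    """Push one change around the loop; return (story, final_direction)."""
    direction = start_direction
    story = []
    for source, target, polarity in links:
        before = "up" if direction > 0 else "down"
        direction *= polarity  # a dampening link flips the direction
        after = "up" if direction > 0 else "down"
        story.append(f"As {source} goes {before}, {target} goes {after}.")
    return story, direction


story, final_direction = walk_loop(ai_loop)
for sentence in story:
    print(sentence)
# The change returns to its starting variable in the same direction it left,
# so each pass adds to the previous one.
print("reinforcing" if final_direction == +1 else "balancing")
```

Because every link in this reading is amplifying, an initial rise in AI reliance returns as a further rise, which matches the accelerating defect density seen in the simulation.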

Skilled, Experienced People as the Solution Provider

Inverting the system of work provides further insights. We once again begin by setting the conditions and making some decisions.

Conditions:

A legacy software system of the lowest reliability

Information that is of the lowest reliability and quality

Goal: Add 96 “Feature Stories”

Simulation time-frame is 1 year

Initial bug count, 0

Initial defect density, 9.25%

Decisions:

4 Developers of the highest skill level

4 Testers of the highest skill level

(Not using AI prompting.)

Again, as the simulation runs, think about “What observations can be made from this?”

We begin the simulation.

One month:

16 “Feature Stories” completed out of 96

13 bugs found

13 open bug tickets remain

Defect density: 14.25%

3 months:

52 “Feature Stories” completed out of 96

52 bugs found

52 open bug tickets remain

Defect density: 14.81%

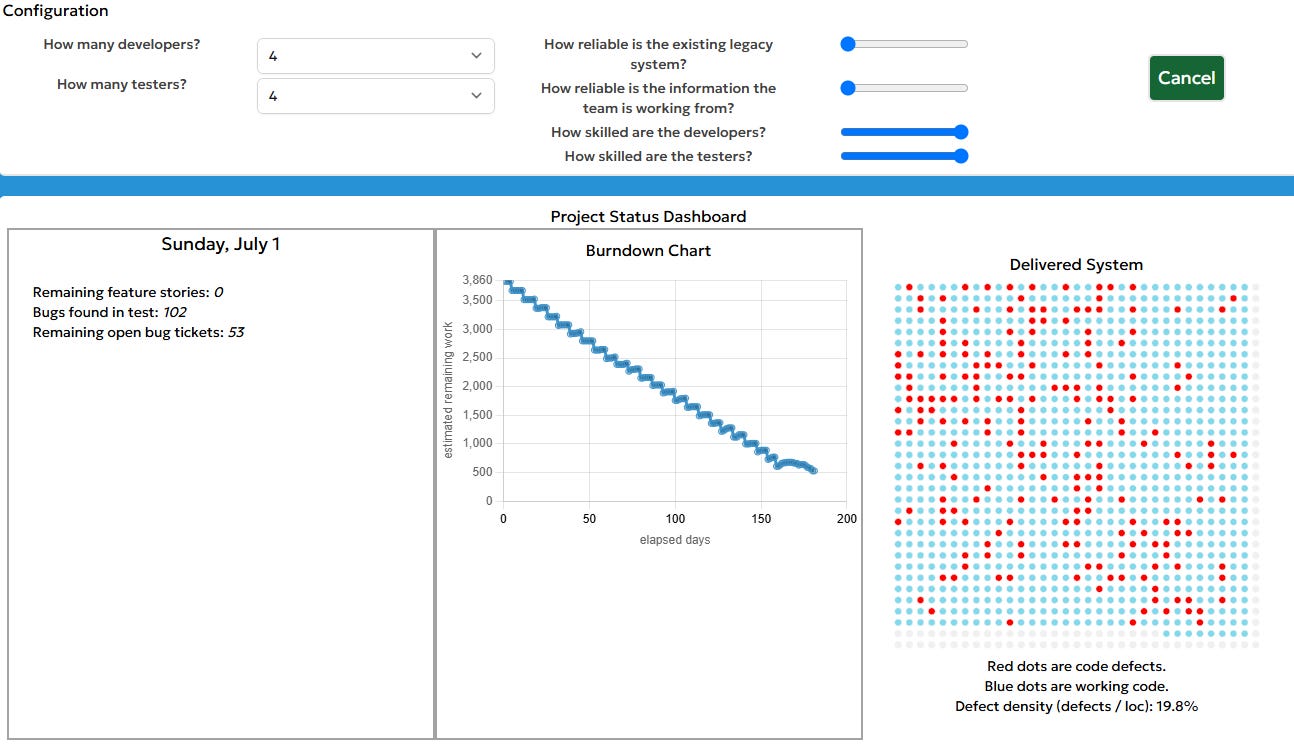

6 months:

96 “Feature Stories” completed out of 96

102 bugs found

53 open bug tickets remain

Defect density: 19.8%

8 months:

96 “Feature Stories” completed out of 96

164 bugs found

0 open bug tickets remain

Defect density: 14.5%
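With both runs reported, the open-bug backlogs can be placed side by side at the checkpoints the two simulations share. The sketch below uses only figures copied from this article. The dictionary layout and the name `compare_open_backlog` are illustrative.

```python
# {month: (bugs_found, open_bugs)} copied from the two runs in this article.
ai_run = {1: (7, 7), 3: (45, 45), 6: (152, 115), 12: (616, 208)}
skilled_run = {1: (13, 13), 3: (52, 52), 6: (102, 53), 8: (164, 0)}


def compare_open_backlog(run_a, run_b):
    """Return {month: (open_a, open_b)} for checkpoints both runs report."""
    shared_months = sorted(run_a.keys() & run_b.keys())
    return {month: (run_a[month][1], run_b[month][1]) for month in shared_months}


for month, (ai_open, skilled_open) in compare_open_backlog(ai_run, skilled_run).items():
    print(f"month {month}: AI-augmented {ai_open} open, skilled {skilled_open} open")
```

At months 1 and 3 the skilled team carries the larger backlog (13 vs. 7, then 52 vs. 45), which fits its much worse starting conditions. By month 6 the positions have swapped: 53 open tickets for the skilled team against 115 for the AI-augmented team.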

Failure Demand

While this legacy system offered the worst in reliability and information, the skilled team maintains steady progress. With each “Feature Story” completed or “Bug” fixed, the software system’s stability increases. Deep experience enables solving problems at the root rather than with surface-level patches.

The simulation stops at eight months as there are no more new features being requested, and the bugs have been resolved.

In comparison to the previous burndown, this shows consistent progress without a reversal. The defect density has stabilized between 14% and 15%, reflecting the team’s ability to understand and manage the system complexity. Experience has transformed potential failure demand into a sustainable maintenance workload, and value-adding software work could continue at a greatly reduced risk.

Modeling with Causal Loops (Skilled, Experienced People as the Solution Provider)

Once again, let’s begin by modeling the conditions and decisions of the legacy software system of work.

The initial state has an unreliable system with low-quality information, but highly skilled developers and testers. Despite the poor information quality, skilled teams are still able to work effectively with the system. Their collective experience increases the probability of discovering bugs and reliability issues before they can impact the system’s reliability.

The skills of the developers and testers act as a counterbalance to the system’s initial poor state. Having the expertise allows them to work effectively even with low quality information, while simultaneously improving system understanding.

Modeling the system of work exposes the reinforcing loop revealed by the simulation.

Looking at this model, we see that each cycle through strengthens the system’s stability, which explains the stabilization of defect density in the simulation.

When skilled and experienced developers and testers implement changes to the system focused on root level solutions rather than quick fixes, the solutions improve system stability while expanding knowledge and understanding.

The defect rate decreases as this knowledge and understanding expands and testing becomes more effective, ultimately allowing the focus to be on value-adding work instead of constant firefighting.

Value-adding work reinforces the team’s ability to apply their skills effectively and completes the reinforcing loop that leads to system improvement.

Summary

There are many things that can be taken from this article.

Through some really excellent modeling tools provided by Elisabeth Hendrickson [1], her incredible work with systems thinking, and her creation of the DuckSim Sandbox [2], coupled with some systems modeling, a stark truth begins to emerge. Organizations cannot simply substitute human expertise with AI in software development.

AI-augmented teams lacking experience took a reliable system and, after 1 year, drove it into chaos with a 48.2% defect density. Skilled developers and testers took a chaotic system and, after 8 months, achieved stability with a 14.5% defect density. The key difference is experience.

When talking about experience, it is more than writing code or fixing bugs - it’s about understanding system behaviors, recognizing patterns, and solving root level problems.

The simulations reveal what many experienced people intuitively know: there is no algorithmic shortcut for the deep understanding that comes from years of hands-on experience. AI can be a powerful tool in the hands of skilled people, but it cannot replace the human expertise needed to maintain and evolve complex software systems.

In the rush to embrace AI’s promise of faster, cheaper, and more efficient development, organizations risk trading their most valuable asset - human experience and knowledge - for a mirage of progress. Not just trading human wisdom for algorithmic shortcuts or degrading code, but dismantling decades of hard-won understanding.

The price to be paid is measured in the gradual erosion of system stability, reliability, and maintainability, among many other issues not discussed in this article.

Questions

How many other organizations are quietly, or not so quietly, trading their accumulated wisdom for the promise of algorithmic efficiency?

What other forms of deep understanding are we risking in the name of innovation?

And perhaps most importantly, when did we start believing that experience - that precious currency of human insight - could be replicated by prompts and parameters?

References

[1] Hendrickson, Elisabeth - Advisor. Coach. Speaker. Author. Interim / fractional VP Eng. / Quality.

https://www.linkedin.com/in/testobsessed/

[2] Hendrickson, Elisabeth - DuckSim Sandbox, © 2024 Curious Duck Digital Laboratory, LLC

[3] Repenning, Nelson P. and Sterman, John D., 2001, “Nobody Ever Gets Credit for Fixing Problems That Never Happened: Creating and Sustaining Process Improvement”

[4] Tosi, Joel, Systems Thinking Workshop - Visualizing Relationships and Knowing Where to Act

[5] Tosi, Joel, Hendrickson, Elisabeth, and Pipito, Chris, 2025, “Engineer Your System”